Last week was an intense one for Latent Interfaces! I traveled to San Francisco to kick off a new community event called Hidden States and demo the latest version of Latent Scope for the Mozilla Builders program. Latent Scope 0.5 is a big revamp of the UI, with huge contributions from friend and former colleague Jimmy Zhang. It's been incredibly energizing to work through so many UX issues together, which I'll detail below after sharing more about Hidden States.

Hidden States is an unconference for people who want to work with ML below the API. We had 50 people from a variety of backgrounds come together for a full day of discussions on everything from sparse autoencoders to agents and autonomy. I wanted to recreate the vibes from the d3.unconf days, where no one person is able to know everything in an exciting new space, but coming together with curious practitioners creates opportunities to connect and learn. It felt like we achieved that aim, thanks to the hard work of my co-organizers Casey and Stella and the humble, curious and phenomenal attendees.

The event built on the energy of the recent Embeddings UX meetup in New York, with two panelists kicking off the day with inspiring keynotes: Leland McInnes and Linus Lee.

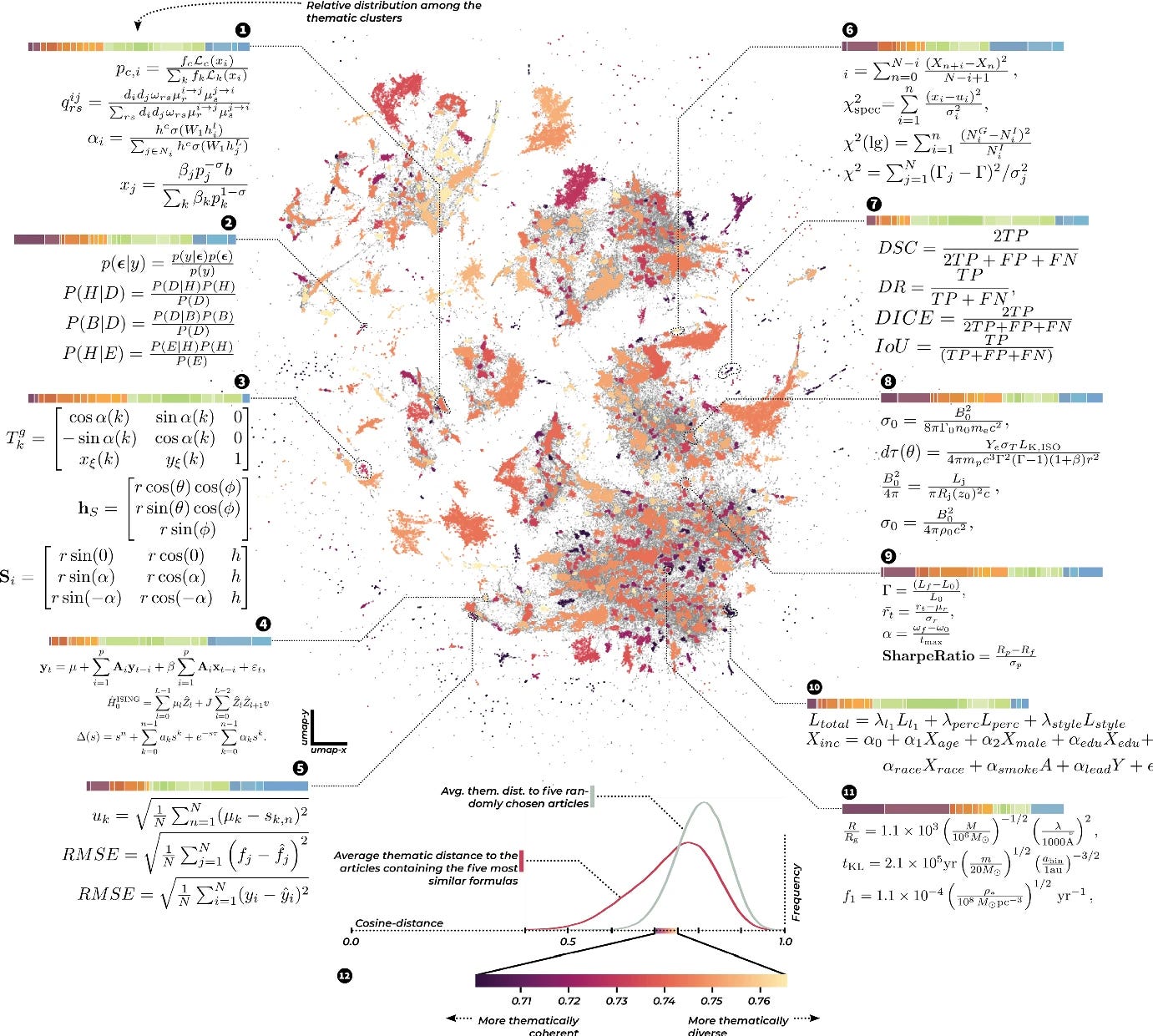

Leland gave a wonderful talk about the value of exploration and the challenges in trying to communicate high-dimensional data. He showed tons of interesting applications of UMAP across many domains, some of which you can see in the responses to this tweet. You can get a sense for the breadth of applications from the examples showcased in the UMAP docs including interactive, exploratory analysis and scientific papers.

Perhaps my favorite example from the talk was this paper mapping mathematical formulas across scientific domains.

Linus gave a thoughtful prompt on representations and interfaces, covering a depth and breadth of perspectives that was very motivating. He considered the variety of representations humans have used to communicate, from language to data visualizations, as well as the emerging machine-friendly representations (hidden states!). Tying these together, he encouraged us to think about the interfaces we have yet to design, looking at the ways direct manipulation and agency have been incorporated into the tools and instruments we already have. We weren't able to record either of the keynotes, but Linus does intend to publish the materials in some form, which I will be very excited to share once he does!

After the keynotes we had a full day of talking to each other, from big discussion groups on explorable explanations or agents to small groups chatting over lunch or in the tea lounge. The lightning talks were also a great hit: with 12 demos in 45 minutes, we got to see many cool ideas and prototypes in rapid succession. The one thing we could have done better is coordinate the discussion topics more effectively. We ended up with either two big groups or dozens of tiny groups, and there is room to do a little more digital coordination so folks can find each other. Out of the chaos some great ideas emerged! We ran a few "speed dating" sessions where people had 90 seconds to talk to someone nearby they hadn't met yet, and we also split everyone into 3 groups for what was essentially group therapy. We prompted folks to share the greatest challenges in their work, which my group agreed included developing UI (struggling with JavaScript and the browser).

Given the good feelings we got from finding a community of curious and kind people, we will certainly want to do it again! A not insignificant number of people traveled from the East Coast to attend, so we may host the next iteration in a new place. Some feedback we got was that people wanted more time together, perhaps learning via workshops or hacking together. In the heyday of d3.unconf we had a 3-day event with workshops, discussion and hacking, in that order. Perhaps this community will grow to such a gathering, though we always capped the attendance at 100 to keep the communal feel.

If this sounds like something you would like to be a part of in the future, fill in the registration form on hiddenstates.org and you'll hear from us as soon as we plan the next one!

Latent Scope 0.5

When I applied to the Mozilla Builders program I had to organize my thoughts and put together a plan for a focused iteration on the project. I realized that there was a lot of functionality exposed in Latent Scope but much of it felt duct-taped together. The main goal, catalogued in this GitHub milestone, was to refactor the UI and make the existing functionality more accessible and more robust. Thanks to help from the Mozilla team and huge contributions from Jimmy, we accomplished quite a bit!

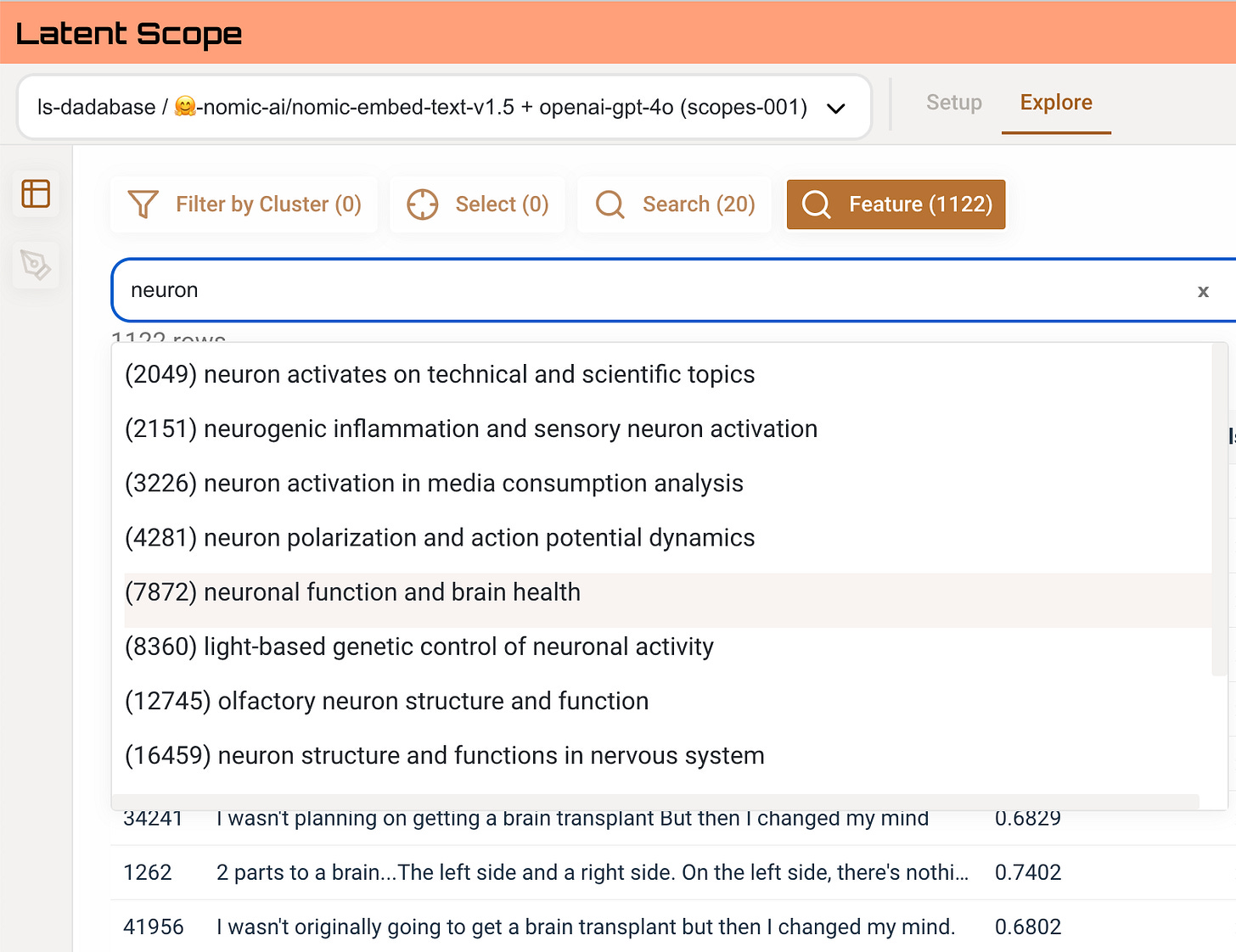

Some notable changes to the UX include a redesign of the Explore page, allowing the map to take up more space (you can even dynamically resize the width of the table to give the map more prominence). The filtering system has also been cleaned up and streamlined, making it a bit simpler to reason about what is being filtered. We decided to cut out editing / curating features for the time being to focus more on the act of exploration. We felt that getting exploration right is a precursor to further editing or annotation.

One exciting new way to filter is by Sparse Autoencoder (SAE) feature. If you've embedded your data with nomic-embed-text-v1.5, you can process it for SAE features from latent-taxonomy! We have more UI improvements on the way to visualize and process these filters, but I'm excited to incorporate SAEs as a first-class feature.
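To give a feel for what filtering by an SAE feature means, here is a minimal sketch in plain Python. It is not the Latent Scope implementation: the data layout (one dict of feature id to activation per row), the function name `filter_by_feature` and the `min_activation` threshold are all assumptions made up for illustration.

```python
def filter_by_feature(row_features, feature_id, min_activation=0.0):
    """Return the indices of rows where the given SAE feature fires.

    row_features: one dict per row mapping feature id -> activation
    (a hypothetical layout, not the on-disk format Latent Scope uses).
    """
    return [
        index
        for index, features in enumerate(row_features)
        if features.get(feature_id, 0.0) > min_activation
    ]


# Toy data: four rows, each with a handful of active features.
rows = [
    {12: 0.8, 40: 0.1},
    {7: 0.5},
    {12: 0.05, 3: 0.9},
    {12: 0.3},
]

# Keep only rows where feature 12 is active above a small threshold.
matching = filter_by_feature(rows, feature_id=12, min_activation=0.1)
```

The threshold is there because a feature can be weakly active on many rows, and raising it narrows the filter to the rows where the feature is most pronounced.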

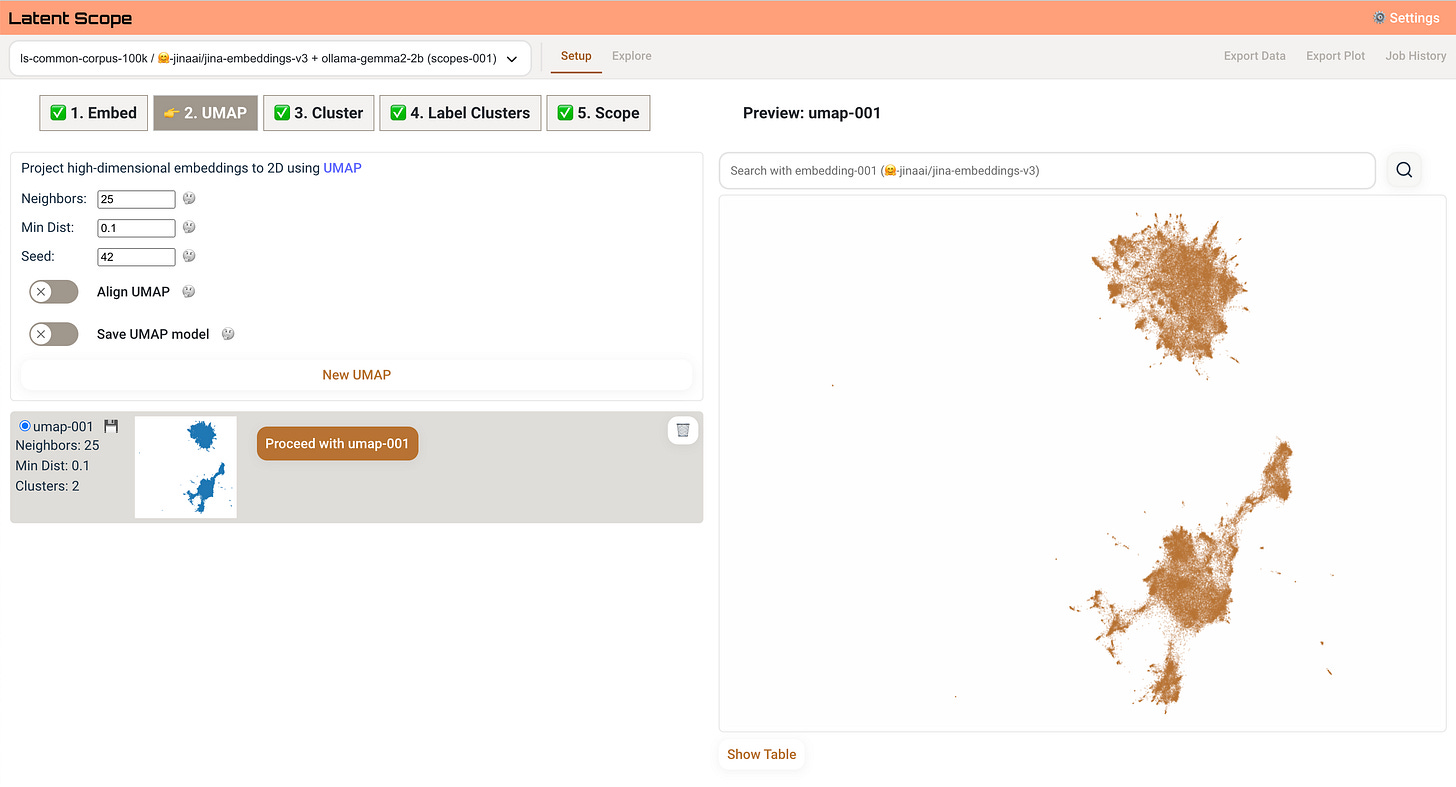

We also made a bunch of improvements for the Setup process, aiming to make it as straightforward as possible to go from text dataset to exploring. A big way we accomplished this was to make the process go step-by-step, dedicating the whole screen to each step one at a time. In this way there is always a preview of your data relevant to the setup step you are on.

We also made the cluster labeling functionality much more robust. You can search for chat models from HuggingFace, choose from any Ollama models you have installed, or even configure a custom URL to hit any OpenAI-compatible endpoint, like a llamafile server. We also made it easier to control how many tokens you're using by letting you choose the number of samples to summarize. The samples are selected by distance from the center of the cluster.
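The sampling idea can be sketched in a few lines of plain Python. This is a rough illustration under assumptions rather than the code Latent Scope runs: I'm assuming the center is the mean of the cluster's points, that the closest points are the ones kept, and the function name `nearest_to_centroid` is hypothetical.

```python
import math


def nearest_to_centroid(points, n_samples):
    """Return indices of the n_samples points closest to the cluster's mean.

    Assumption: "center of the cluster" is taken to be the centroid (mean),
    and closer points are preferred as representative samples.
    """
    dims = len(points[0])
    centroid = [sum(p[d] for p in points) / len(points) for d in range(dims)]
    # Sort point indices by Euclidean distance to the centroid, nearest first.
    order = sorted(range(len(points)), key=lambda i: math.dist(points[i], centroid))
    return order[:n_samples]


# Toy 2D cluster with one far-away outlier at index 5.
cluster = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.6), (5.0, 5.0)]
picked = nearest_to_centroid(cluster, 3)
```

Fewer samples means fewer tokens sent to the labeling model, and taking the points nearest the center keeps outliers like the last point out of the summary.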

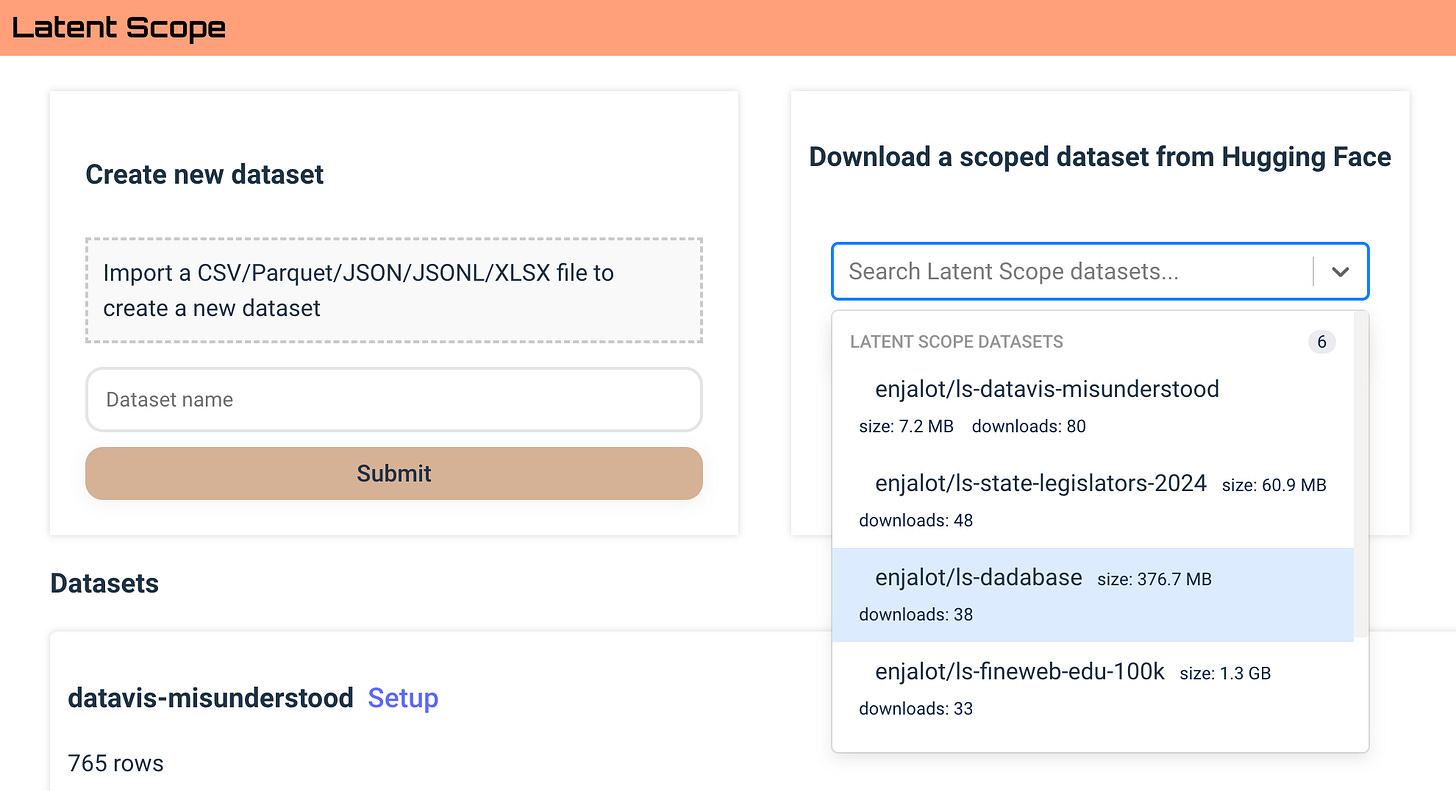

One last thing we added is the ability to upload and download scopes via HuggingFace datasets. This feels exciting to me because it makes it much easier to share what I find while exploring new datasets. It was immediately useful in setting Jimmy up with good example datasets, and now I can finally share my dad-a-base!

What's next?

Right now we are actively working on improving the SAE functionality and making it easier to browse and filter by features. The next major piece of functionality is support for images and image embedding models like CLIP and SigLIP. I'm also looking into switching the backend from Parquet files to LanceDB, as it looks like it would improve both image storage and embedding storage as well as provide easy and performant nearest neighbor search. Lastly, we really want to have some kind of deployed version of the explore experience to make it even easier to share scopes!

We'd really appreciate any feedback on Latent Scope. You can respond directly to this email or fill out this form anonymously to help us improve in the right latent directions: https://forms.gle/8Kbm1xi44mqc2ojZ7

Thank you for reading!

P.S. Our Discord has been growing as well, with #hiddenstates, #latentscope and #sae channels. Join the chat!